decamp: how to let AI build a tiny webapp that works

By july

When working with AI coding tools, it’s crucial to plan before diving into implementation. Through our recent experience converting Campsite data exports, we discovered that providing comprehensive context to AI models leads to significantly better results. This post details our workflow using Gemini for planning, Bolt for initial implementation, and Cursor for refinement.

the problem: Campsite exports are unreadable

Campsite is an app for teams to communicate, and we’ve really enjoyed using it at STL. Unfortunately, they announced that they are shutting down in February 2025.

They have a data export functionality, but it’s hard to actually get to the contents:

- Folders use IDs instead of meaningful names

- The post body + comments are in a JSON object and not rendered properly.

$ tree ./campsite-export
├── channels
│   ├── 373dvggke0zm
│   │   └── channel.json
│   ├── 5abbvaug7sco
│   │   ├── channel.json
│   │   └── posts
│   │       ├── 13zry38af46g
│   │       │   └── post.json
│   │       ├── 3zs3r1hbdr8w
│   │       │   └── post.json
│   │       ...
│   ...
└── users.json

66 directories, 64 files

A third issue is that the export is spread across many separate files. In today’s AI-first world, where context is everything, we want a single file that we can easily drop into the context window of an LLM, so that we can get insights from our Campsite communications down the road.

We wanted to convert this nested JSON .zip into readable Markdown files that are easy to traverse. We could’ve written a Python script to do that, but figured we might as well make a tiny webapp so that other Campsite users could benefit from it too!

We didn’t want to spend a lot of time on it, and the functionality is relatively simple, so it’s a clear use case for AI coding tools.

outcome-based AI coding

The premise of AI coding tools like Bolt and Cursor is that they allow you to think more about the desired output, and less about how you’re gonna get there (they’ll figure that out).

However, diving into implementation right away will often lead to the AI getting stuck in some partial implementation without properly having thought out the overall structure.

Context is everything for LLMs, and this holds true for these AI coding tools as well. The more context we provide them up front, the better they perform when implementing a solution.

So whenever we’ve wanted to build a webapp or a larger feature, we’ve begun to approach it by first writing down a proper description of the functionality, and making sure that this tech spec is complete before starting implementation. This is an explicit choice, because the design of tools like Cursor makes it easy to start generating code straight away. (Which is not what you want!)

For decamp, we had three rough stages: first, we planned using a Gemini conversation, then we created an initial implementation with Bolt, and then we made tweaks and polished using Cursor.

planning stage: go from rough description to tech spec

If you take away one thing from reading this, it should be this: take the time to write a properly defined plan for the feature you want to implement.

The goal is to let Gemini (or another high-intelligence model) write a precise tech spec based on a rough description of what you want.

We created a new Gemini conversation where we provided it with:

- The folder structure of the Campsite export, including the contents of different types of files (channel.json, post.json, and users.json).

- Bullet points describing what the webapp should do (e.g. take a .zip folder and return output files; describe desired output structure; only process client-side).

- What the desired output format in Markdown would look like:

## <Post Title>
[resolved] by <User Display Name> on YYYY-MM-DD
resolved on: YYYY-MM-DD: <Resolution Comment> (skip if not resolved)

<Post Description (replace <@user_id> with display names)>

### comments

#### [resolved] <User Display Name> (YYYY-MM-DD HH:MM):
<Comment Body (replace <@user_id> with display names)>
- [reply] <User Display Name> at YYYY-MM-DD HH:MM: <Reply Body (replace <@user_id> with display names)>
...

#### ... second comment ...
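An output format like the one above maps fairly directly onto a small rendering function. Here is a minimal TypeScript sketch of that idea, not decamp’s actual code: the `CampsitePost`, `PostComment` and `PostReply` shapes and all of their field names are hypothetical stand-ins for the real export schema, and the exact line breaks in the output are our guess.

```typescript
// Hypothetical shapes: every field name below is an illustrative assumption,
// not the real Campsite export schema.
interface PostReply {
  authorId: string;
  createdAt: string; // ISO 8601 timestamp, e.g. "2025-01-15T09:30:00Z"
  body: string;
}
interface PostComment extends PostReply {
  resolved: boolean;
  replies: PostReply[];
}
interface CampsitePost {
  title: string;
  authorId: string;
  createdAt: string;
  description: string;
  resolvedAt?: string;
  resolutionComment?: string;
  comments: PostComment[];
}

type UserNames = Map<string, string>; // user id -> display name

// Replace every <@user_id> mention with the matching display name,
// leaving mentions of unknown ids untouched.
function replaceMentions(text: string, users: UserNames): string {
  return text.replace(/<@([^>]+)>/g, (match, id: string) => users.get(id) ?? match);
}

// Slice the ISO string directly, so dates stay in the timezone of the export.
const isoDay = (iso: string): string => iso.slice(0, 10); // YYYY-MM-DD
const isoMinute = (iso: string): string => `${isoDay(iso)} ${iso.slice(11, 16)}`; // YYYY-MM-DD HH:MM

function renderPost(post: CampsitePost, users: UserNames): string {
  const displayName = (id: string): string => users.get(id) ?? id;
  const lines: string[] = [];

  lines.push(`## ${post.title}`);
  const tag = post.resolvedAt !== undefined ? "[resolved] " : "";
  lines.push(`${tag}by ${displayName(post.authorId)} on ${isoDay(post.createdAt)}`);
  if (post.resolvedAt !== undefined) {
    // Only emitted for resolved posts ("skip if not resolved").
    lines.push(`resolved on: ${isoDay(post.resolvedAt)}: ${post.resolutionComment ?? ""}`);
  }
  lines.push("", replaceMentions(post.description, users), "", "### comments");

  for (const comment of post.comments) {
    const commentTag = comment.resolved ? "[resolved] " : "";
    lines.push("", `#### ${commentTag}${displayName(comment.authorId)} (${isoMinute(comment.createdAt)}):`);
    lines.push(replaceMentions(comment.body, users));
    for (const reply of comment.replies) {
      lines.push(
        `- [reply] ${displayName(reply.authorId)} at ${isoMinute(reply.createdAt)}: ${replaceMentions(reply.body, users)}`
      );
    }
  }
  return lines.join("\n");
}
```

Building the output as an array of lines and joining at the end keeps the function close to the template, which makes it easy to compare the two side by side when the format changes.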

We didn’t know if processing/creating a bunch of markdown files was actually possible to do purely client-side, so we asked Gemini if it was possible, and if so, to write a tech spec / plan of attack.
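To give a feel for why this is feasible in the browser: once a zip library has unpacked the archive, the rest is plain string and JSON handling. The sketch below is hedged accordingly. It assumes the unpacked export is already available as a map from file path to file text (how you get there depends on the zip library you pick), and it assumes `channel.json` has a `name` field, which is a guess on our part. `groupByChannel` and `outputFileName` are names made up for this example.

```typescript
// Assumption: a zip library has already unpacked the export in the browser
// into a map of relative path -> file text. Paths follow the tree shown
// earlier, e.g. "channels/<channelId>/posts/<postId>/post.json".
type ExportFiles = Map<string, string>;

interface ChannelGroup {
  id: string;
  name: string;        // falls back to the id when channel.json has no name
  postPaths: string[]; // paths of this channel's post.json files
}

function groupByChannel(files: ExportFiles): ChannelGroup[] {
  const groups = new Map<string, ChannelGroup>();
  const groupFor = (id: string): ChannelGroup => {
    let group = groups.get(id);
    if (!group) {
      group = { id, name: id, postPaths: [] };
      groups.set(id, group);
    }
    return group;
  };

  files.forEach((text, path) => {
    const parts = path.split("/");
    if (parts[0] !== "channels" || parts.length < 3) return; // e.g. users.json
    const group = groupFor(parts[1]);

    if (parts.length === 3 && parts[2] === "channel.json") {
      // Hypothetical field: we assume channel.json carries a "name" string.
      const parsed: unknown = JSON.parse(text);
      const name =
        typeof parsed === "object" && parsed !== null
          ? (parsed as { name?: unknown }).name
          : undefined;
      if (typeof name === "string" && name.length > 0) group.name = name;
    } else if (parts.length === 5 && parts[2] === "posts" && parts[4] === "post.json") {
      group.postPaths.push(path);
    }
  });
  return Array.from(groups.values());
}

// Turn a channel name into a safe Markdown file name.
function outputFileName(channelName: string): string {
  const slug = channelName
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
  return `${slug || "channel"}.md`;
}
```

Grouping by path first also solves the “folders use IDs” problem from earlier: each group carries a readable name, so the output files can be named after the channel rather than its ID.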

The contents of the input .json files were quite lengthy, so to keep the prompt for the AI code generators concise, we let Gemini infer types and include those in the tech spec. That way, Bolt and Cursor knew what input data they were working with without having to parse long-ass JSON files.
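As an illustration of what a compact type buys you compared to a raw JSON sample, here is a rough TypeScript sketch for `users.json`. The field names are placeholders we made up for this example (the types Gemini actually inferred are part of the tech spec), and `isExportedUser` and `parseUsers` are hypothetical helpers.

```typescript
// Placeholder type: the field names are assumptions made for illustration,
// not the schema from the real export or the tech spec.
interface ExportedUser {
  id: string;
  displayName: string;
}

// Narrow an unknown JSON value to ExportedUser before trusting it.
function isExportedUser(value: unknown): value is ExportedUser {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  return typeof candidate.id === "string" && typeof candidate.displayName === "string";
}

// Parse the text of users.json into an id -> display name lookup,
// skipping entries that don't match the expected shape.
function parseUsers(usersJson: string): Map<string, string> {
  const parsed: unknown = JSON.parse(usersJson);
  const lookup = new Map<string, string>();
  if (!Array.isArray(parsed)) return lookup;
  for (const entry of parsed) {
    if (isExportedUser(entry)) lookup.set(entry.id, entry.displayName);
  }
  return lookup;
}
```

A few lines of types like this tell a code generator everything it needs about the input, where the equivalent sample data would take up a large chunk of the prompt.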

One thing we didn’t do, but that would definitely be a good idea: ask Gemini to ask clarifying questions, and include your answers when creating the tech spec! This helps identify gaps in the description of the functionality you gave.

initial implementation: a Bolt one-shot

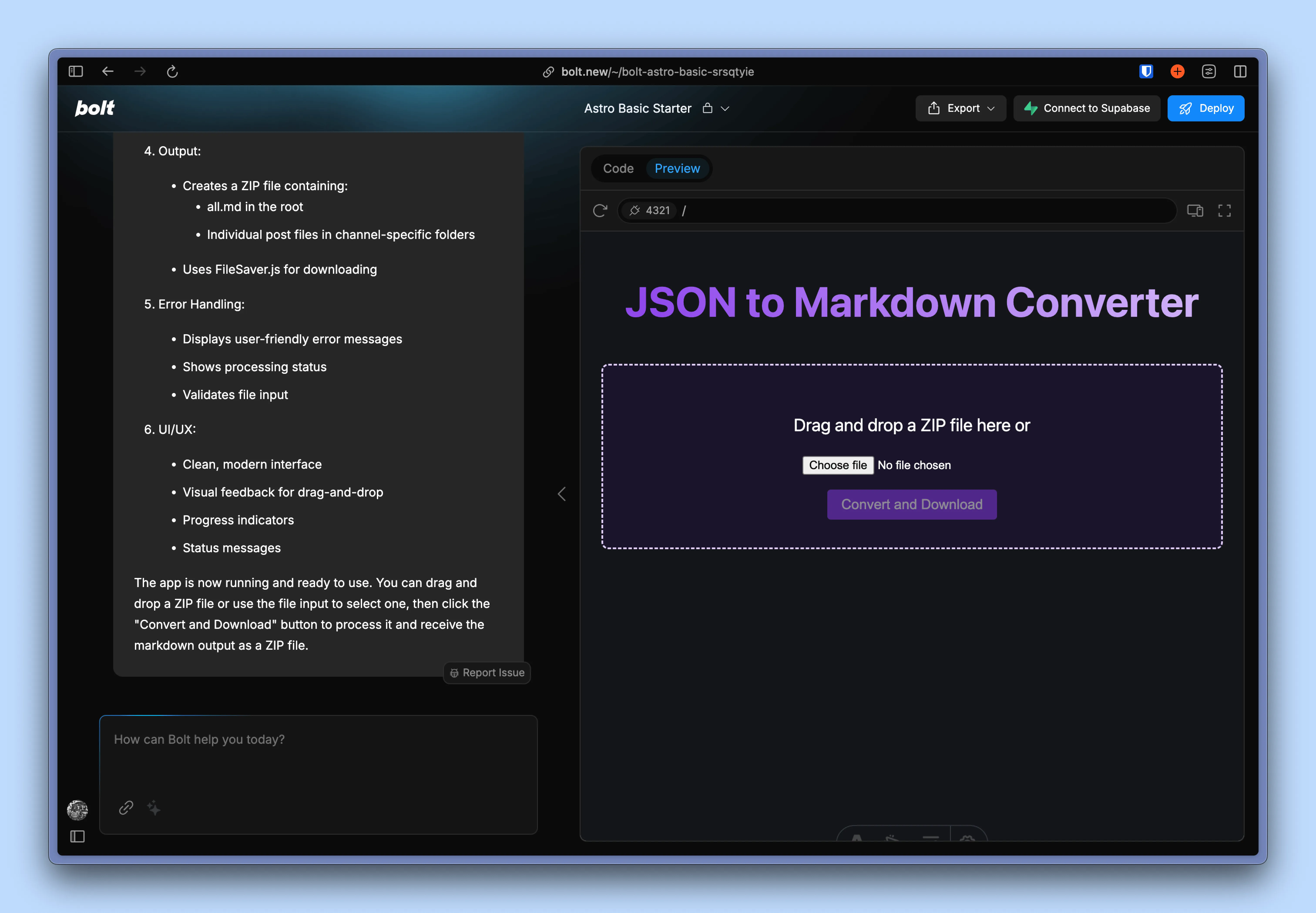

Then, we went to https://bolt.new, created an empty Astro starter, and pasted the tech spec in the chat.

Amazingly, Bolt one-shotted a fully working version! While this is definitely an easy case (it could write all required JS in one inline script), it would’ve probably taken a bunch of iterations if we hadn’t done the pre-work of consolidating the rough idea + input data samples + desired output format into a concentrated tech spec.

In the process of trying it out, we found some small things we wanted to change, and the style was definitely giving “I’ve been generated by AI”.

Rather than trying to fix these in Bolt (in which it’s hard to target incremental changes), we downloaded the codebase and opened it up in Cursor for final refinements.

polishing with Cursor

In Cursor, we made some quick improvements to get everything just right. We tweaked the output format a bit, fixed how it handles images, and added an example of the Markdown output.

We also made some manual tweaks to the styling and appearance. (So, decamp still has some human coding involved!)

With everything polished up, we deployed to Firebase (our go-to solution for free static hosting), connected it to https://decamp.stl.dev, and we were up and running.

conclusion

To get the most out of AI coding tools, it’s essential to not start implementing straight away, but to plan first. This seems to be what others are discovering too. An additional advantage is that we could leverage Gemini’s knowledge of, for example, which JS libraries to use for file processing, to check whether the idea was feasible in the first place.

Using Bolt to create the initial project setup and Cursor for refinements works very well. While Bolt can scaffold a whole project better than Cursor, we found that it performed poorly with follow-up prompts (it actually deleted essential code it had previously written).

For styling, you still need to apply some manual judgment. We haven’t found a way to integrate design-oriented AI coding tools like v0 yet; in this case, some manual polishing was all we needed.

If you’re curious, you can check out the final code (and the generated tech spec) in our GitHub repo. And if you’re a Campsite user, try out the tool and let us know what you think!